[root@master231 scheduler]# vim 01-deploy-scheduler-nodeName.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nodename
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      nodeName: worker233
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 01-deploy-scheduler-nodeName.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl describe pod deploy-nodename-699559557c-p2twh

Name: deploy-nodename-699559557c-p2twh

Namespace: default

Priority: 0

Node: worker233/10.0.0.233

……

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Pulled 29s kubelet Container image "registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2" already present on machine

Normal Created 29s kubelet Created container c1

Normal Started 29s kubelet Started container c1
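
Note: because nodeName binds each Pod directly to worker233, the default scheduler is bypassed, which is consistent with the events shown above coming only from the kubelet. As a minimal sketch, the helper below lists the Pods bound to a given node through the spec.nodeName field selector. The function name pods_on_node is hypothetical and not part of the original session.

```shell
# Hypothetical helper (not from the original session): list the Pods
# bound to one node by filtering on the spec.nodeName field.
pods_on_node() {
  kubectl get pods -o wide --field-selector "spec.nodeName=$1"
}

# Example call: pods_on_node worker233
```

Run before the Deployment is deleted, this should list only the replicas placed on the named node.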

[root@master231 scheduler]# kubectl delete -f 01-deploy-scheduler-nodeName.yaml

[root@master231 scheduler]# vim 02-deploy-scheduler-hostport.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-hostport
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        ports:
        - containerPort: 80
          hostPort: 90

[root@master231 scheduler]# kubectl apply -f 02-deploy-scheduler-hostport.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl describe po deploy-hostport-557b7f449b-t4mbd

Name: deploy-hostport-557b7f449b-t4mbd

Namespace: default

Priority: 0

Node: <none>

Labels: app=xiu

pod-template-hash=557b7f449b

version=v1

Annotations: <none>

Status: Pending

……

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 30s default-scheduler 0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't have free ports for the requested pod ports.
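
Note: a hostPort can be claimed only once per node. With the master tainted and two workers available, at most two of the three replicas can bind port 90, so the remaining replica stays Pending with the message above. As a minimal sketch, the helper below lists the Pods that are still waiting for a node. The function name pending_pods is hypothetical.

```shell
# Hypothetical helper (not from the original session): show only the
# Pods whose phase is Pending, i.e. those not yet bound and started.
pending_pods() {
  kubectl get pods -o wide --field-selector status.phase=Pending
}

# Example call: pending_pods
```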

[root@master231 scheduler]# kubectl delete -f 02-deploy-scheduler-hostport.yaml

[root@master231 scheduler]# vim 03-deploy-scheduler-hostNetwork.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-hostnetwork
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      hostNetwork: true
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 03-deploy-scheduler-hostNetwork.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl describe pod deploy-hostnetwork-698ddcf86d-4kjht

Name: deploy-hostnetwork-698ddcf86d-4kjht

Namespace: default

Priority: 0

Node: <none>

Labels: app=xiu

pod-template-hash=698ddcf86d

version=v1

Annotations: <none>

Status: Pending

……

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 31s default-scheduler 0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 node(s) didn't have free ports for the requested pod ports.

[root@master231 scheduler]# kubectl delete -f 03-deploy-scheduler-hostNetwork.yaml

[root@master231 scheduler]# vim 04-deploy-scheduler-resources.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-resources
spec:
  replicas: 3
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        resources:
        #  requests:
        #    cpu: 200m
        #    memory: 200Mi
        #  limits:
        #    cpu: 0.5
        #    memory: 500Mi
          limits:
            cpu: 1
            memory: 500Mi
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 04-deploy-scheduler-resources.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl describe po deploy-resources-584fc548d5-29s4w

Name: deploy-resources-584fc548d5-29s4w

Namespace: default

Priority: 0

Node: <none>

Labels: app=xiu

pod-template-hash=584fc548d5

version=v1

Annotations: <none>

Status: Pending

……

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 26s default-scheduler 0/3 nodes are available: 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 2 Insufficient cpu.
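
Note: when only limits are set, the requests default to the same values (the describe output in the tolerations example of this session shows Requests of cpu: 1 and memory: 500Mi). The scheduler places Pods by requests, so each replica here needs one full CPU that is still unreserved on a node. As a minimal sketch, the helper below prints what each node can allocate. The function name node_allocatable is hypothetical.

```shell
# Hypothetical helper (not from the original session): print the
# allocatable CPU and memory of every node as a small table.
node_allocatable() {
  kubectl get nodes \
    -o custom-columns=NAME:.metadata.name,CPU:.status.allocatable.cpu,MEMORY:.status.allocatable.memory
}

# Example call: node_allocatable
```

Comparing these figures with the "Allocated resources" section of kubectl describe node shows how much CPU is left for new requests.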

[root@master231 scheduler]# kubectl delete -f 04-deploy-scheduler-resources.yaml

[root@master231 scheduler]# kubectl get nodes --show-labels

[root@master231 scheduler]# kubectl label nodes worker232 K8S=lili

[root@master231 scheduler]# kubectl label nodes worker233 K8S=linux

[root@master231 scheduler]# kubectl get nodes --show-labels -l K8S

[root@master231 scheduler]# vim 05-deploy-scheduler-nodeSelector.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nodeselector
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      nodeSelector:
        K8S: linux
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 05-deploy-scheduler-nodeSelector.yaml

[root@master231 scheduler]# kubectl get pods -o wide

[root@master231 scheduler]# kubectl get nodes -l K8S=linux

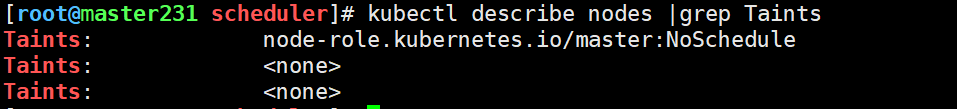

Tip:

A Taints value of <none> means the node has no taints.

[root@master231 scheduler]# kubectl describe nodes |grep Taints

[root@master231 scheduler]# kubectl taint node --all K8S=lin:PreferNoSchedule

node/master231 tainted

node/worker232 tainted

node/worker233 tainted

[root@master231 scheduler]#

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

K8S=lin:PreferNoSchedule

Unschedulable: false

--

Taints: K8S=lin:PreferNoSchedule

Unschedulable: false

Lease:

--

Taints: K8S=lin:PreferNoSchedule

Unschedulable: false

Lease:

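Only the value of an existing taint can be changed with --overwrite. Specifying a different effect does not modify the taint; it creates a new one with the same key.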

[root@master231 scheduler]# kubectl taint node worker233 K8S=laonanhai:PreferNoSchedule --overwrite

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

K8S=lin:PreferNoSchedule

Unschedulable: false

--

Taints: K8S=lin:PreferNoSchedule

Unschedulable: false

Lease:

--

Taints: K8S=laonanhai:PreferNoSchedule

Unschedulable: false

Lease:
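
Note: the overwrite above works because a taint is identified by its key and effect, and the value is only an attribute of that pair. As a minimal sketch, the helper below extracts that identity from kubectl's key[=value]:effect notation. The function name taint_identity is hypothetical, and the parsing is a simplified illustration rather than kubectl's own logic.

```shell
# Hypothetical helper (not from the original session): print the
# "key:effect" pair that identifies a taint given as key[=value]:effect.
taint_identity() (
  spec=$1
  effect=${spec##*:}      # text after the last ':'
  key_value=${spec%:*}    # text before the last ':'
  key=${key_value%%=*}    # drop an optional '=value' suffix
  printf '%s:%s\n' "$key" "$effect"
)

# Example call: taint_identity K8S=lin:PreferNoSchedule
```

Both K8S=lin:PreferNoSchedule and K8S=laonanhai:PreferNoSchedule print K8S:PreferNoSchedule, so the second replaces the first. K8S=lin:NoSchedule prints K8S:NoSchedule, a different identity, so it would be added as a second taint.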

[root@master231 scheduler]# kubectl taint node --all K8S-

node/master231 untainted

node/worker232 untainted

node/worker233 untainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

[root@master231 scheduler]# kubectl taint node worker233 K8S:NoSchedule

node/worker233 tainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: K8S:NoSchedule

Unschedulable: false

Lease:

[root@master231 scheduler]# cat 06-deploy-scheduler-Taints.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-taints
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        resources:
          limits:
            cpu: 1
            memory: 500Mi
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 06-deploy-scheduler-Taints.yaml

deployment.apps/deploy-taints created

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-taints-584fc548d5-2bdl6 0/1 Pending 0 3s <none> <none> <none> <none>

deploy-taints-584fc548d5-frd64 0/1 Pending 0 3s <none> <none> <none> <none>

deploy-taints-584fc548d5-g9vnq 1/1 Running 0 3s 10.100.1.44 worker232 <none> <none>

deploy-taints-584fc548d5-m9pjc 1/1 Running 0 3s 10.100.1.43 worker232 <none> <none>

deploy-taints-584fc548d5-sjn6c 0/1 Pending 0 3s <none> <none> <none> <none>

[root@master231 scheduler]# kubectl describe pod deploy-taints-584fc548d5-2bdl6

Name: deploy-taints-584fc548d5-2bdl6

Namespace: default

Priority: 0

Node: <none>

Labels: app=xiu

pod-template-hash=584fc548d5

version=v1

Annotations: <none>

Status: Pending

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 78s default-scheduler 0/3 nodes are available: 1 Insufficient cpu, 1 node(s) had taint {node-role.kubernetes.io/master: }, that the pod didn't tolerate, 1 node(s) had taint {K8S: }, that the pod didn't tolerate.

[root@master231 scheduler]# kubectl taint node worker233 K8S:PreferNoSchedule

node/worker233 tainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: K8S:NoSchedule

K8S:PreferNoSchedule

Unschedulable: false

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-taints-584fc548d5-2bdl6 0/1 Pending 0 3m18s <none> <none> <none> <none>

deploy-taints-584fc548d5-frd64 0/1 Pending 0 3m18s <none> <none> <none> <none>

deploy-taints-584fc548d5-g9vnq 1/1 Running 0 3m18s 10.100.1.44 worker232 <none> <none>

deploy-taints-584fc548d5-m9pjc 1/1 Running 0 3m18s 10.100.1.43 worker232 <none> <none>

deploy-taints-584fc548d5-sjn6c 0/1 Pending 0 3m18s <none> <none> <none> <none>

[root@master231 scheduler]# kubectl taint node worker233 K8S:NoSchedule-

node/worker233 untainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: K8S:PreferNoSchedule

Unschedulable: false

Lease:

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-taints-584fc548d5-2bdl6 1/1 Running 0 4m14s 10.100.2.208 worker233 <none> <none>

deploy-taints-584fc548d5-frd64 1/1 Running 0 4m14s 10.100.2.209 worker233 <none> <none>

deploy-taints-584fc548d5-g9vnq 1/1 Running 0 4m14s 10.100.1.44 worker232 <none> <none>

deploy-taints-584fc548d5-m9pjc 1/1 Running 0 4m14s 10.100.1.43 worker232 <none> <none>

deploy-taints-584fc548d5-sjn6c 1/1 Running 0 4m14s 10.100.2.207 worker233 <none> <none>

[root@master231 scheduler]# kubectl taint node worker233 K8S=lin:NoExecute

node/worker233 tainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: K8S=lin:NoExecute

K8S:PreferNoSchedule

Unschedulable: false

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-taints-584fc548d5-fl4hz 0/1 Pending 0 8s <none> <none> <none> <none>

deploy-taints-584fc548d5-frd64 1/1 Terminating 0 7m45s 10.100.2.209 worker233 <none> <none>

deploy-taints-584fc548d5-g9vnq 1/1 Running 0 7m45s 10.100.1.44 worker232 <none> <none>

deploy-taints-584fc548d5-m9pjc 1/1 Running 0 7m45s 10.100.1.43 worker232 <none> <none>

deploy-taints-584fc548d5-qm5hl 0/1 Pending 0 8s <none> <none> <none> <none>

deploy-taints-584fc548d5-sjn6c 1/1 Terminating 0 7m45s 10.100.2.207 worker233 <none> <none>

deploy-taints-584fc548d5-v678w 0/1 Pending 0 8s <none> <none> <none> <none>

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-taints-584fc548d5-fl4hz 0/1 Pending 0 14s <none> <none> <none> <none>

deploy-taints-584fc548d5-g9vnq 1/1 Running 0 7m51s 10.100.1.44 worker232 <none> <none>

deploy-taints-584fc548d5-m9pjc 1/1 Running 0 7m51s 10.100.1.43 worker232 <none> <none>

deploy-taints-584fc548d5-qm5hl 0/1 Pending 0 14s <none> <none> <none> <none>

deploy-taints-584fc548d5-v678w 0/1 Pending 0 14s <none> <none> <none> <none>

[root@master231 scheduler]# kubectl taint node worker233 K8S-

node/worker233 untainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

[root@master231 scheduler]# kubectl delete -f 06-deploy-scheduler-Taints.yaml

deployment.apps "deploy-taints" deleted

[root@master231 scheduler]# kubectl taint node --all K8S=lin:NoSchedule

node/master231 tainted

node/worker232 tainted

node/worker233 tainted

[root@master231 scheduler]# kubectl taint node worker233 class=linux99:NoExecute

node/worker233 tainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

K8S=lin:NoSchedule

Unschedulable: false

--

Taints: K8S=lin:NoSchedule

Unschedulable: false

Lease:

--

Taints: class=linux99:NoExecute

K8S=lin:NoSchedule

Unschedulable: false

[root@master231 scheduler]# cat 07-deploy-scheduler-tolerations.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-tolerations
spec:
  replicas: 10
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      # Configure taint tolerations
      tolerations:
      # Taint key to match. If omitted, every key matches (this requires operator: Exists).
      - key: K8S
        # Taint value to match. With operator: Exists the value is left out and any value matches.
        value: lin
        # Taint effect to match. If omitted, every effect matches.
        effect: NoSchedule
      - key: class
        # operator describes how key and value relate; valid values are Exists and Equal (default: Equal).
        # With Exists, only the key is given and any value matches.
        operator: Exists
        effect: NoExecute
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      # A toleration with operator: Exists and no key, value, or effect tolerates every taint.
      #- operator: Exists
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        resources:
          limits:
            cpu: 1
            memory: 500Mi
        ports:
        - containerPort: 80
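
Note: the matching rules described in the manifest comments can be sketched as a small shell function. This is a simplified model for illustration, not Kubernetes source code, and the function name tolerates and its argument order are hypothetical.

```shell
# Hypothetical model of toleration matching (illustration only).
# Usage: tolerates T_KEY T_OPERATOR T_VALUE T_EFFECT TAINT_KEY TAINT_VALUE TAINT_EFFECT
# Returns 0 if the toleration matches the taint, non-zero otherwise.
tolerates() (
  t_key=$1; t_op=${2:-Equal}; t_value=$3; t_effect=$4
  taint_key=$5; taint_value=$6; taint_effect=$7

  # An empty toleration effect matches every effect.
  [ -z "$t_effect" ] || [ "$t_effect" = "$taint_effect" ] || exit 1
  # An empty toleration key matches every key (only valid with Exists).
  [ -z "$t_key" ] || [ "$t_key" = "$taint_key" ] || exit 1

  case $t_op in
    Exists) exit 0 ;;                            # any value matches
    Equal)  [ "$t_value" = "$taint_value" ] ;;   # values must be identical
    *)      exit 1 ;;                            # unknown operator
  esac
)

# Example call: tolerates class Exists "" NoExecute class linux99 NoExecute
```

Under this model, the three tolerations in the manifest match K8S=lin:NoSchedule, class=linux99:NoExecute, and node-role.kubernetes.io/master:NoSchedule respectively, which is why Pods can land on all three nodes.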

[root@master231 scheduler]# kubectl apply -f 07-deploy-scheduler-tolerations.yaml

deployment.apps/deploy-tolerations created

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-tolerations-74b865776b-55pqw 0/1 Pending 0 3s <none> <none> <none> <none>

deploy-tolerations-74b865776b-5gv7x 1/1 Running 0 3s 10.100.2.225 worker233 <none> <none>

deploy-tolerations-74b865776b-7kmx2 0/1 Pending 0 3s <none> <none> <none> <none>

deploy-tolerations-74b865776b-c5f8g 0/1 ContainerCreating 0 3s <none> master231 <none> <none>

deploy-tolerations-74b865776b-c6j7h 1/1 Running 0 3s 10.100.2.224 worker233 <none> <none>

deploy-tolerations-74b865776b-f8j4k 0/1 ContainerCreating 0 3s <none> master231 <none> <none>

deploy-tolerations-74b865776b-hkvll 1/1 Running 0 3s 10.100.1.60 worker232 <none> <none>

deploy-tolerations-74b865776b-j2smm 1/1 Running 0 3s 10.100.2.223 worker233 <none> <none>

deploy-tolerations-74b865776b-lbgwc 1/1 Running 0 3s 10.100.1.61 worker232 <none> <none>

deploy-tolerations-74b865776b-xchp7 0/1 ContainerCreating 0 3s <none> master231 <none> <none>

[root@master231 scheduler]# kubectl get pods -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

deploy-tolerations-74b865776b-55pqw 0/1 Pending 0 6s <none> <none> <none> <none>

deploy-tolerations-74b865776b-5gv7x 1/1 Running 0 6s 10.100.2.225 worker233 <none> <none>

deploy-tolerations-74b865776b-7kmx2 0/1 Pending 0 6s <none> <none> <none> <none>

deploy-tolerations-74b865776b-c5f8g 1/1 Running 0 6s 10.100.0.11 master231 <none> <none>

deploy-tolerations-74b865776b-c6j7h 1/1 Running 0 6s 10.100.2.224 worker233 <none> <none>

deploy-tolerations-74b865776b-f8j4k 1/1 Running 0 6s 10.100.0.10 master231 <none> <none>

deploy-tolerations-74b865776b-hkvll 1/1 Running 0 6s 10.100.1.60 worker232 <none> <none>

deploy-tolerations-74b865776b-j2smm 1/1 Running 0 6s 10.100.2.223 worker233 <none> <none>

deploy-tolerations-74b865776b-lbgwc 1/1 Running 0 6s 10.100.1.61 worker232 <none> <none>

deploy-tolerations-74b865776b-xchp7 1/1 Running 0 6s 10.100.0.12 master231 <none> <none>

[root@master231 scheduler]# kubectl describe pod deploy-tolerations-74b865776b-55pqw

Name: deploy-tolerations-74b865776b-55pqw

Namespace: default

Priority: 0

Node: <none>

Labels: app=xiu

pod-template-hash=74b865776b

version=v1

Annotations: <none>

Status: Pending

...

Containers:

c1:

Image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2

Port: 80/TCP

Host Port: 0/TCP

Limits:

cpu: 1

memory: 500Mi

Requests:

cpu: 1

memory: 500Mi

...

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Warning FailedScheduling 16s (x2 over 18s) default-scheduler 0/3 nodes are available: 3 Insufficient cpu.

[root@master231 scheduler]# kubectl delete -f 07-deploy-scheduler-tolerations.yaml

deployment.apps "deploy-tolerations" deleted

[root@master231 scheduler]# kubectl taint node --all K8S-

node/master231 untainted

node/worker232 untainted

node/worker233 untainted

[root@master231 scheduler]# kubectl taint node worker233 class-

node/worker233 untainted

[root@master231 scheduler]# kubectl describe nodes | grep Taints -A 2

Taints: node-role.kubernetes.io/master:NoSchedule

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

--

Taints: <none>

Unschedulable: false

Lease:

[root@master231 scheduler]# kubectl label nodes master231 K8S=yitiantian

[root@master231 scheduler]# kubectl get nodes --show-labels -l K8S |grep K8S

[root@master231 scheduler]# vim 08-deploy-scheduler-nodeAffinity.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-nodeaffinity
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      # Configure affinity for the Pod
      affinity:
        # Node affinity: which nodes the Pod may be scheduled onto, matched by node labels.
        nodeAffinity:
          # Hard requirement; must be satisfied
          requiredDuringSchedulingIgnoredDuringExecution:
            # Match on node labels
            nodeSelectorTerms:
            # Match node labels by expression
            - matchExpressions:
              # Node label key
              - key: K8S
                # Node label values
                values:
                - linux
                - yitiantian
                # Relationship between the key and the values
                operator: In
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        # resources:
        #   limits:
        #     cpu: 1
        #     memory: 500Mi
        ports:
        - containerPort: 80
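
Note: the In expression above has the same meaning as a set-based label selector, so the candidate nodes can be previewed from the command line. As a minimal sketch, the helper below does that. The function name nodes_with_label_in is hypothetical.

```shell
# Hypothetical helper (not from the original session): list the nodes
# whose label KEY has one of the comma-separated VALUES.
# Usage: nodes_with_label_in KEY VALUE1,VALUE2,...
nodes_with_label_in() {
  kubectl get nodes -l "$1 in ($2)"
}

# Example call: nodes_with_label_in K8S linux,yitiantian
```

Assuming the labels applied earlier in this session are unchanged (worker232 K8S=lili, worker233 K8S=linux, master231 K8S=yitiantian), the example call should list master231 and worker233 but not worker232.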

[root@master231 scheduler]# kubectl apply -f 08-deploy-scheduler-nodeAffinity.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl delete -f 08-deploy-scheduler-nodeAffinity.yaml

[root@master231 scheduler]# kubectl label nodes master231 dc=jiuxianqiao

[root@master231 scheduler]# kubectl label nodes worker232 dc=lugu

[root@master231 scheduler]# kubectl label nodes worker233 dc=zhaowei

[root@master231 scheduler]# kubectl get nodes --show-labels |grep dc

[root@master231 scheduler]# cat 09-deploy-scheduler-podAffinity.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-podaffinity
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      affinity:
        # Configure Pod affinity
        podAffinity:
          # Hard requirement; must be satisfied
          requiredDuringSchedulingIgnoredDuringExecution:
          # Node label key that defines the topology domain
          - topologyKey: dc
            # Label selector that picks the Pods to co-locate with
            labelSelector:
              matchExpressions:
              - key: app
                values:
                - xiu
                operator: In
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
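
Note: with topologyKey: dc, nodes that share the same dc label value form one topology domain, and the hard podAffinity rule keeps every replica in the domain that already holds a Pod labeled app=xiu. As a minimal sketch, the helper below shows which domain each node belongs to. The function name topology_domains is hypothetical.

```shell
# Hypothetical helper (not from the original session): list nodes with
# an extra column showing the value of the given topology label.
# Usage: topology_domains LABEL_KEY
topology_domains() {
  kubectl get nodes -L "$1"
}

# Example call: topology_domains dc
```

While every node has a different dc value, each domain is a single node. After master231 is relabeled to dc=lugu, master231 and worker232 share one domain.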

[root@master231 scheduler]# kubectl apply -f 09-deploy-scheduler-podAffinity.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl delete pods --all

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl label nodes master231 dc=lugu --overwrite

[root@master231 scheduler]# kubectl get nodes --show-labels |grep dc

[root@master231 scheduler]# kubectl delete pods --all

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl delete -f 09-deploy-scheduler-podAffinity.yaml

[root@master231 scheduler]# kubectl get no --show-labels |grep dc

[root@master231 scheduler]# vim 10-deploy-scheduler-podAntAffinity.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-podaffinity
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      affinity:
        # Configure Pod anti-affinity
        podAntiAffinity:
          # Hard requirement; must be satisfied
          requiredDuringSchedulingIgnoredDuringExecution:
          # Node label key that defines the topology domain
          - topologyKey: dc
            # Label selector that picks the Pods to keep apart from
            labelSelector:
              matchExpressions:
              - key: app
                values:
                - xiu
                operator: In
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2

[root@master231 scheduler]# kubectl apply -f 10-deploy-scheduler-podAntAffinity.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl label nodes master231 dc=jiuxianqiao --overwrite

[root@master231 scheduler]# kubectl get nodes --show-labels |grep dc

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl delete -f 10-deploy-scheduler-podAntAffinity.yaml

[root@master231 ~]# kubectl get nodes

[root@master231 ~]# kubectl cordon worker233

[root@master231 ~]# kubectl get nodes

[root@master231 ~]# kubectl describe nodes |grep Taints -A 2
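
Note: cordon marks the node as unschedulable (shown as SchedulingDisabled in kubectl get nodes) and adds the node.kubernetes.io/unschedulable:NoSchedule taint, while Pods already running on the node are left alone. As a minimal sketch, the helper below lists only the cordoned nodes. The function name cordoned_nodes is hypothetical.

```shell
# Hypothetical helper (not from the original session): list the nodes
# that are currently marked unschedulable (cordoned or drained).
cordoned_nodes() {
  kubectl get nodes --field-selector spec.unschedulable=true
}

# Example call: cordoned_nodes
```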

[root@master231 ~]# cd /lin/manifests/scheduler/

[root@master231 scheduler]# vim 06-deploy-scheduler-Taints.yaml

[root@master231 scheduler]# cat 06-deploy-scheduler-Taints.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-taints
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        resources:
          limits:
            cpu: 1
            memory: 500Mi
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 06-deploy-scheduler-Taints.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl uncordon worker233

[root@master231 scheduler]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

master231 Ready control-plane,master 15d v1.23.17

worker232 Ready <none> 15d v1.23.17

worker233 Ready <none> 15d v1.23.17

[root@master231 scheduler]# kubectl describe nodes |grep Taints -A 2

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl get no

[root@master231 scheduler]# kubectl cordon worker232 worker233

[root@master231 scheduler]# kubectl get no

[root@master231 scheduler]# kubectl uncordon worker232 worker233

[root@master231 scheduler]# kubectl get no

[root@master231 scheduler]# vim 06-deploy-scheduler-Taints.yaml

apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-taints
spec:
  replicas: 5
  selector:
    matchLabels:
      app: xiu
  template:
    metadata:
      labels:
        app: xiu
        version: v1
    spec:
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/lili-k8s/apps:v2
        #resources:
        #  limits:
        #    cpu: 1
        #    memory: 500Mi
        ports:
        - containerPort: 80

[root@master231 scheduler]# kubectl apply -f 06-deploy-scheduler-Taints.yaml

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl get no

[root@master231 scheduler]# kubectl get po -o wide

[root@master231 scheduler]# kubectl drain worker233 --ignore-daemonsets --delete-emptydir-data

[root@master231 scheduler]# kubectl get nodes

[root@master231 scheduler]# kubectl describe nodes |grep Taints -A 2

[root@master231 scheduler]# kubectl get po -o wide