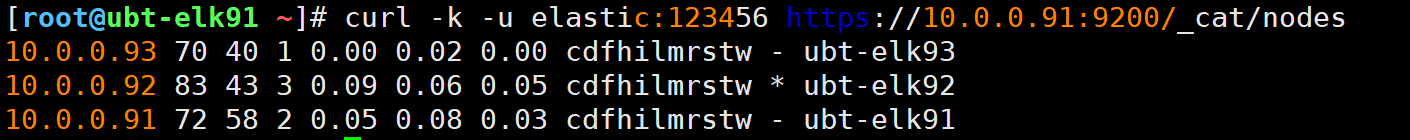

```
[root@ubt-elk91 ~]# curl -k -u elastic:123456 https://10.0.0.91:9200/_cat/nodes
10.0.0.93 70 40 1 0.00 0.02 0.00 cdfhilmrstw - ubt-elk93
10.0.0.92 83 43 3 0.09 0.06 0.05 cdfhilmrstw * ubt-elk92
10.0.0.91 72 58 2 0.05 0.08 0.03 cdfhilmrstw - ubt-elk91
```
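In the output above, the ninth column (`master`) marks the elected master node with `*` (ubt-elk92 here) and every other node with `-`. Below is a minimal Python sketch for checking this from a script. It assumes the default column order that `_cat/nodes` prints when no `?h=` is given (ip, heap.percent, ram.percent, cpu, load_1m, load_5m, load_15m, node.role, master, name). The names `CatNode` and `parse_cat_nodes` are hypothetical.

```python
from dataclasses import dataclass


# Assumed default column order of GET _cat/nodes (no ?h=, no ?v):
# ip heap.percent ram.percent cpu load_1m load_5m load_15m node.role master name
@dataclass
class CatNode:
    ip: str
    heap_percent: int
    ram_percent: int
    cpu: int
    roles: str
    is_master: bool
    name: str


def parse_cat_nodes(text: str) -> list[CatNode]:
    """Turn the plain-text _cat/nodes output into CatNode records."""
    nodes = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) != 10:
            continue  # skip blank or unexpected lines
        ip, heap, ram, cpu, _l1, _l5, _l15, roles, master, name = fields
        nodes.append(CatNode(ip, int(heap), int(ram), int(cpu), roles, master == "*", name))
    return nodes


sample = """\
10.0.0.93 70 40 1 0.00 0.02 0.00 cdfhilmrstw - ubt-elk93
10.0.0.92 83 43 3 0.09 0.06 0.05 cdfhilmrstw * ubt-elk92
10.0.0.91 72 58 2 0.05 0.08 0.03 cdfhilmrstw - ubt-elk91
"""

nodes = parse_cat_nodes(sample)
master = [n.name for n in nodes if n.is_master]
print(len(nodes), master)  # 3 ['ubt-elk92']
```

Requesting `_cat/nodes?v` adds a header row, and `?h=` changes the columns. A parser like this would need adjusting in either case.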

Open Kibana at http://10.0.0.91:5601/.

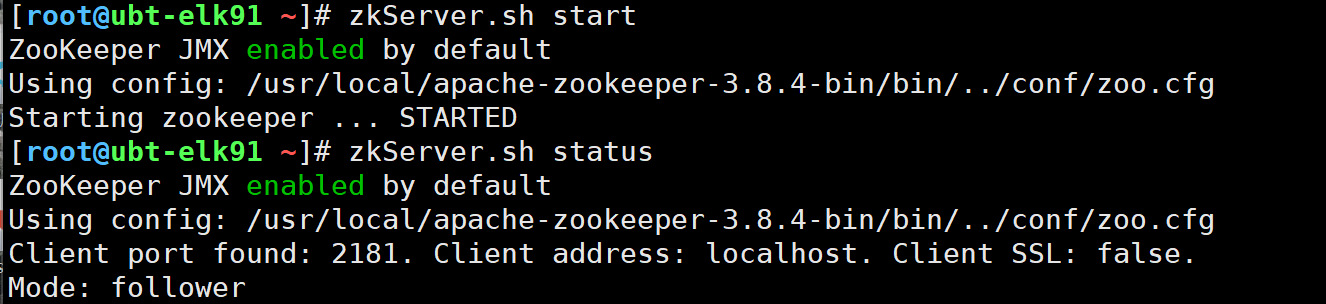

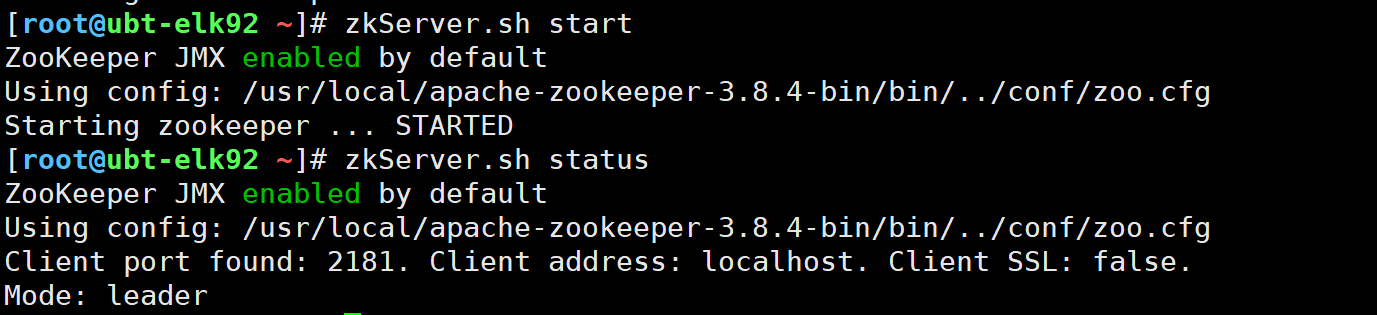

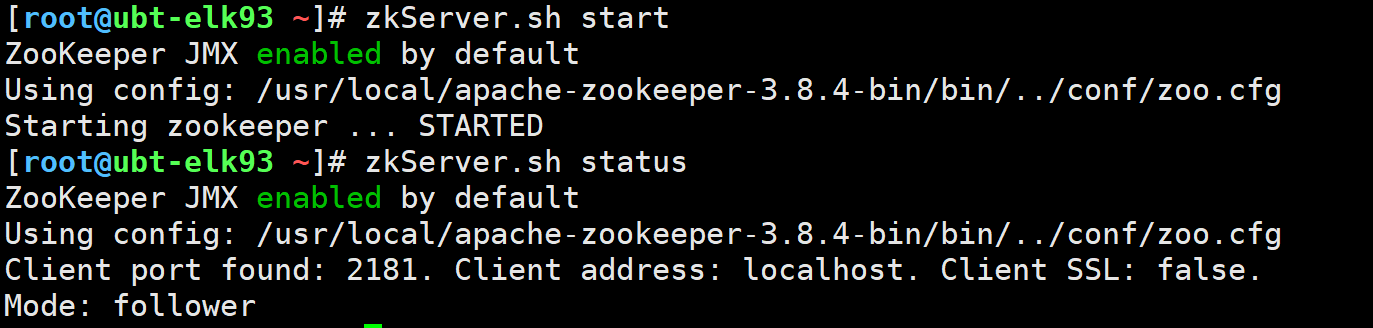

```
[root@ubt-elk91 ~]# zkServer.sh start
[root@ubt-elk91 ~]# zkServer.sh status
[root@ubt-elk92 ~]# zkServer.sh start
[root@ubt-elk92 ~]# zkServer.sh status
[root@ubt-elk93 ~]# zkServer.sh start
[root@ubt-elk93 ~]# zkServer.sh status
```

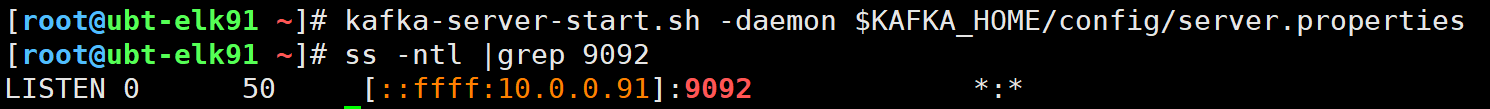

```
[root@ubt-elk91 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties
[root@ubt-elk91 ~]# ss -ntl | grep 9092
```

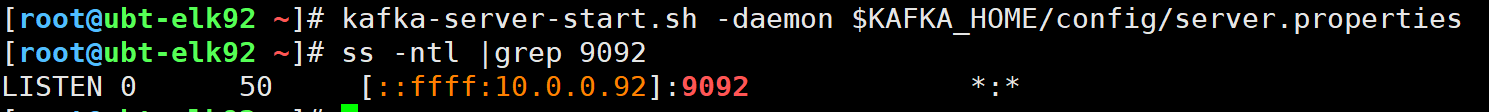

```
[root@ubt-elk92 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties
[root@ubt-elk92 ~]# ss -ntl | grep 9092
```

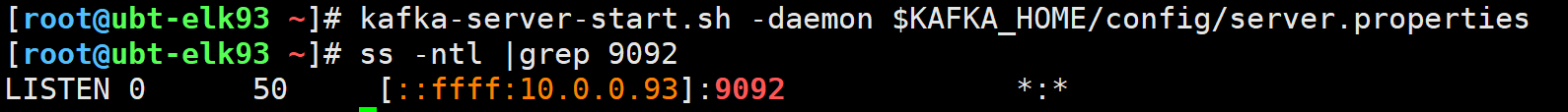

```
[root@ubt-elk93 ~]# kafka-server-start.sh -daemon $KAFKA_HOME/config/server.properties
[root@ubt-elk93 ~]# ss -ntl | grep 9092
```
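`ss -ntl` only shows that a broker is listening locally. Kafka clients connect to each broker's advertised address directly, so it can be worth checking reachability from the machines that will produce to Kafka, such as the Kubernetes worker nodes. Here is a small Python sketch that uses only the standard library. `port_open` and `check_brokers` are hypothetical helper names.

```python
import socket


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:  # refused, unreachable, or timed out
        return False


def check_brokers(ips, port=9092, timeout=2.0):
    """Map each broker IP to whether its Kafka port accepted a TCP connection."""
    return {ip: port_open(ip, port, timeout) for ip in ips}
```

For example, `check_brokers(["10.0.0.91", "10.0.0.92", "10.0.0.93"])` should return `True` for all three addresses once every broker has started and the network path is open.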

```
[root@ubt-elk93 ~]# zkCli.sh -server 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181
Connecting to 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181
...
WATCHER::

WatchedEvent state:SyncConnected type:None path:null
[zk: 10.0.0.91:2181,10.0.0.92:2181,10.0.0.93:2181(CONNECTED) 0] ls /kafka391/brokers/ids
[91, 92, 93]
```
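Each running broker registers an ephemeral znode under `brokers/ids`, named after its `broker.id`. The znode disappears when the broker's ZooKeeper session ends, so `[91, 92, 93]` means all three brokers are up. The `/kafka391` prefix suggests that `zookeeper.connect` uses a `/kafka391` chroot. This is inferred from the path and is not shown in the broker config here. The following sketch turns the `ls` output into a pass/fail check. `missing_brokers` is a hypothetical helper.

```python
import ast


def missing_brokers(zk_ls_output: str, expected: set[int]) -> set[int]:
    """Return the expected broker ids that are NOT registered.

    `zk_ls_output` is the list zkCli.sh prints for `ls /<chroot>/brokers/ids`,
    e.g. "[91, 92, 93]".
    """
    registered = set(ast.literal_eval(zk_ls_output.strip()))  # safe literal parse, no eval
    return expected - registered


expected_ids = {91, 92, 93}  # broker.id values seen in the output above
print(missing_brokers("[91, 92, 93]", expected_ids) or "all brokers registered")
```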

```
[root@master231 elasticstack]# vim 01-sidecar-cm-ep-filebeat.yaml
```

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: svc-kafka
subsets:
- addresses:
  - ip: 10.0.0.91
  - ip: 10.0.0.92
  - ip: 10.0.0.93
  ports:
  - port: 9092
---
apiVersion: v1
kind: Service
metadata:
  name: svc-kafka
spec:
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 9092
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: cm-filebeat
data:
  main: |
    filebeat.config.modules:
      path: ${path.config}/modules.d/*.yml
      reload.enabled: true

    output.kafka:
      hosts:
      - svc-kafka:9092
      topic: "linux-k8s-external-kafka"
  nginx.yml: |
    - module: nginx
      access:
        enabled: true
        var.paths: ["/data/access.log"]
      error:
        enabled: false
        var.paths: ["/data/error.log"]
      ingress_controller:
        enabled: false
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: elasticstack
      version: v3
  template:
    metadata:
      labels:
        apps: elasticstack
        version: v3
    spec:
      volumes:
      - name: dt
        hostPath:
          path: /etc/localtime
      - name: data
        emptyDir: {}
      - name: main
        configMap:
          name: cm-filebeat
          items:
          - key: main
            path: modules-to-es.yaml
          - key: nginx.yml
            path: nginx.yml
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: data
          mountPath: /var/log/nginx
        - name: dt
          mountPath: /etc/localtime
      - name: c2
        image: harbor250.liliyy.top/filebeat/docker.elastic.co/beats/filebeat:7.17.25
        volumeMounts:
        - name: dt
          mountPath: /etc/localtime
        - name: data
          mountPath: /data
        - name: main
          mountPath: /config/modules-to-es.yaml
          subPath: modules-to-es.yaml
        - name: main
          mountPath: /usr/share/filebeat/modules.d/nginx.yml
          subPath: nginx.yml
        command:
        - /bin/bash
        - -c
        - "filebeat -e -c /config/modules-to-es.yaml --path.data /tmp/xixi"
```
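The `svc-kafka` Service has no `selector`, so Kubernetes does not create or update endpoints for it. Instead, the Endpoints object with the same name supplies the three external broker addresses by hand. As an illustration, the sketch below builds the same pair of objects in Python and prints them as a JSON `List` that `kubectl apply -f` can read. `external_service` is a hypothetical helper.

`svc-kafka:9092` only serves as the bootstrap address. After the first metadata request, Filebeat connects to the brokers' `advertised.listeners` directly, so the pods must also be able to reach 10.0.0.91 to 10.0.0.93.

```python
import json


def external_service(name: str, ips: list[str], port: int) -> list[dict]:
    """Build a selector-less Service plus a hand-maintained Endpoints object.

    The two objects are linked only by sharing the same metadata.name.
    """
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        "metadata": {"name": name},
        "subsets": [{
            "addresses": [{"ip": ip} for ip in ips],
            "ports": [{"port": port}],
        }],
    }
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",
            # Deliberately no "selector": the endpoints controller leaves our Endpoints alone.
            "ports": [{"protocol": "TCP", "port": port}],
        },
    }
    return [endpoints, service]


objs = external_service("svc-kafka", ["10.0.0.91", "10.0.0.92", "10.0.0.93"], 9092)
print(json.dumps({"apiVersion": "v1", "kind": "List", "items": objs}, indent=2))
```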

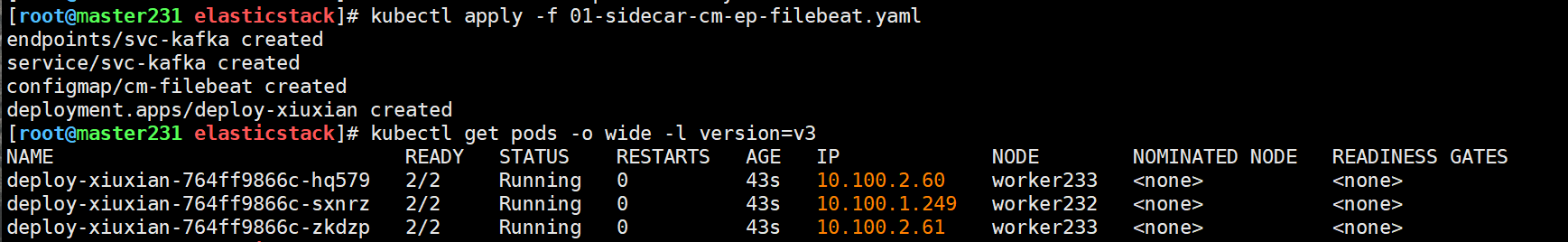

```
[root@master231 elasticstack]# kubectl apply -f 01-sidecar-cm-ep-filebeat.yaml
```

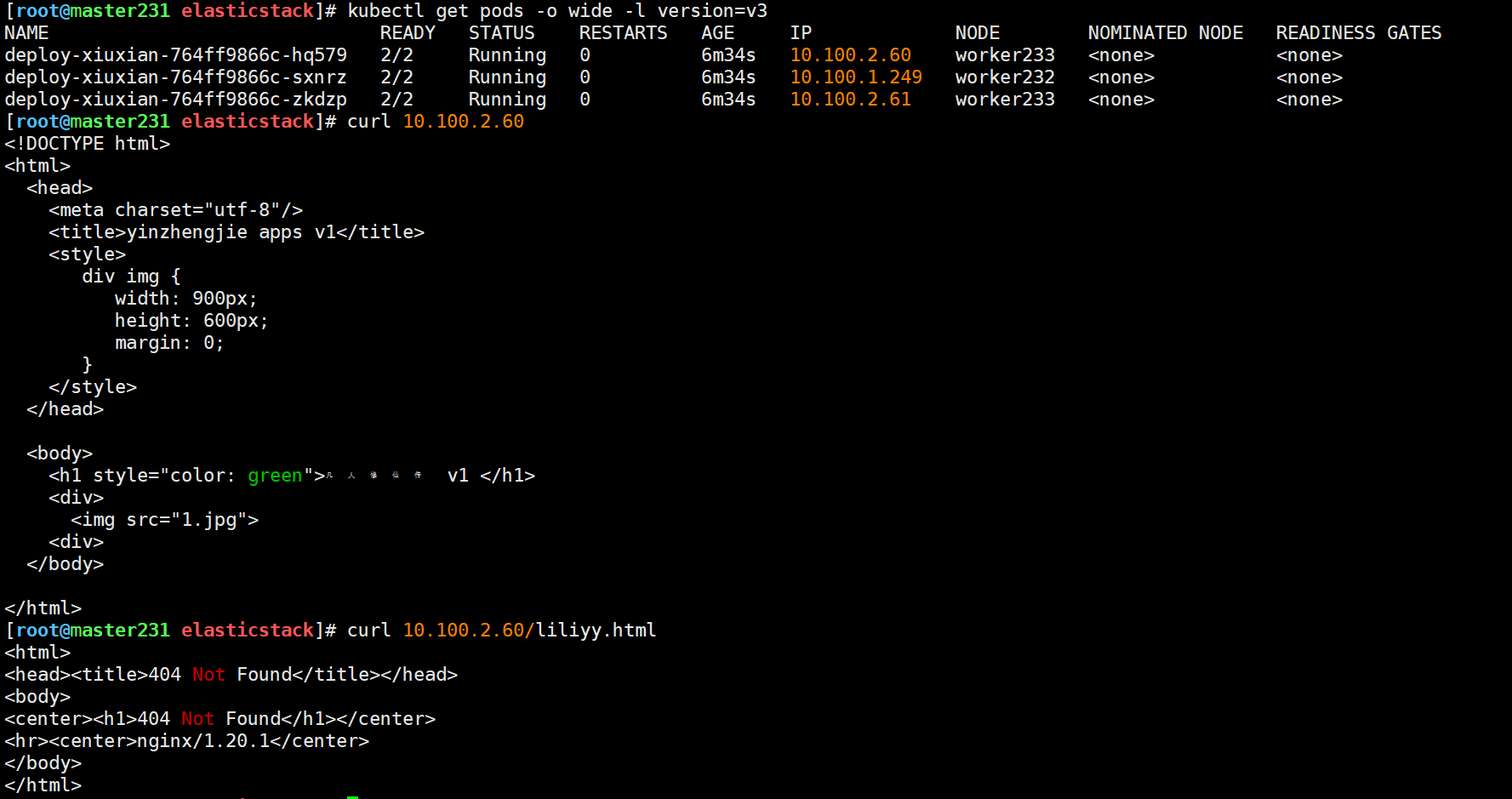

```
[root@master231 elasticstack]# kubectl get pods -o wide -l version=v3
```

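Exec into one of the pods and check the log directory mounted from the shared emptyDir. Without `-c`, kubectl attaches to the first container, `c1`: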

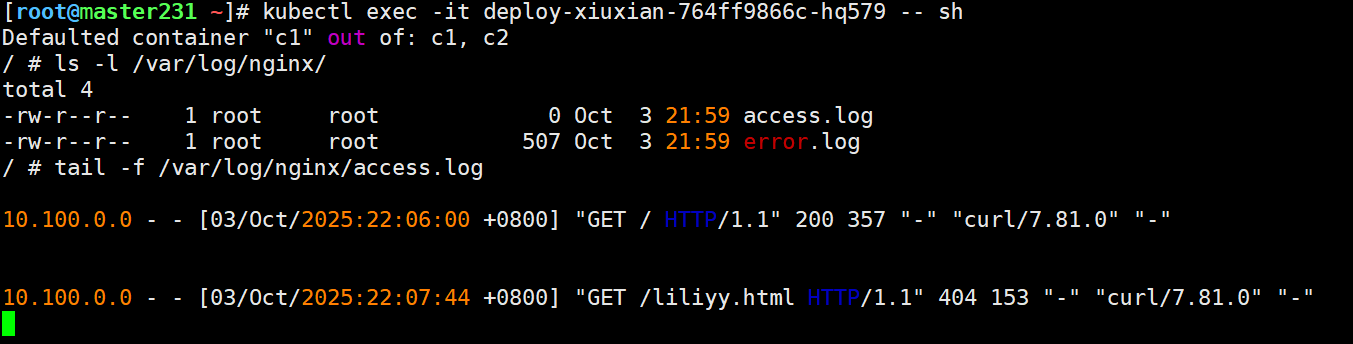

```
[root@master231 ~]# kubectl exec -it deploy-xiuxian-764ff9866c-hq579 -- sh
/ # ls -l /var/log/nginx/
/ # tail -f /var/log/nginx/access.log
```

```
[root@master231 elasticstack]# kubectl get pods -o wide -l version=v3
NAME                              READY   STATUS    RESTARTS   AGE     IP             NODE        NOMINATED NODE   READINESS GATES
deploy-xiuxian-764ff9866c-hq579   2/2     Running   0          6m34s   10.100.2.60    worker233   <none>           <none>
deploy-xiuxian-764ff9866c-sxnrz   2/2     Running   0          6m34s   10.100.1.249   worker232   <none>           <none>
deploy-xiuxian-764ff9866c-zkdzp   2/2     Running   0          6m34s   10.100.2.61    worker233   <none>           <none>

[root@master231 elasticstack]# curl 10.100.2.60
<!DOCTYPE html>
<html>
<head>
<meta charset="utf-8"/>
<title>yinzhengjie apps v1</title>
<style>
div img {
width: 900px;
height: 600px;
margin: 0;
}
</style>
</head>
<body>
<h1 style="color: green">凡人修仙传 v1 </h1>
<div>
<img src="1.jpg">
<div>
</body>
</html>

[root@master231 elasticstack]# curl 10.100.2.60/liliyy.html
<html>
<head><title>404 Not Found</title></head>
<body>
<center><h1>404 Not Found</h1></center>
<hr><center>nginx/1.20.1</center>
</body>
</html>
```

```
[root@ubt-elk92 ~]# kafka-topics.sh --bootstrap-server 10.0.0.93:9092 --list | grep kafka
linux-k8s-external-kafka
[root@ubt-elk92 ~]# kafka-console-consumer.sh --bootstrap-server 10.0.0.93:9092 --topic linux-k8s-external-kafka --from-beginning
```

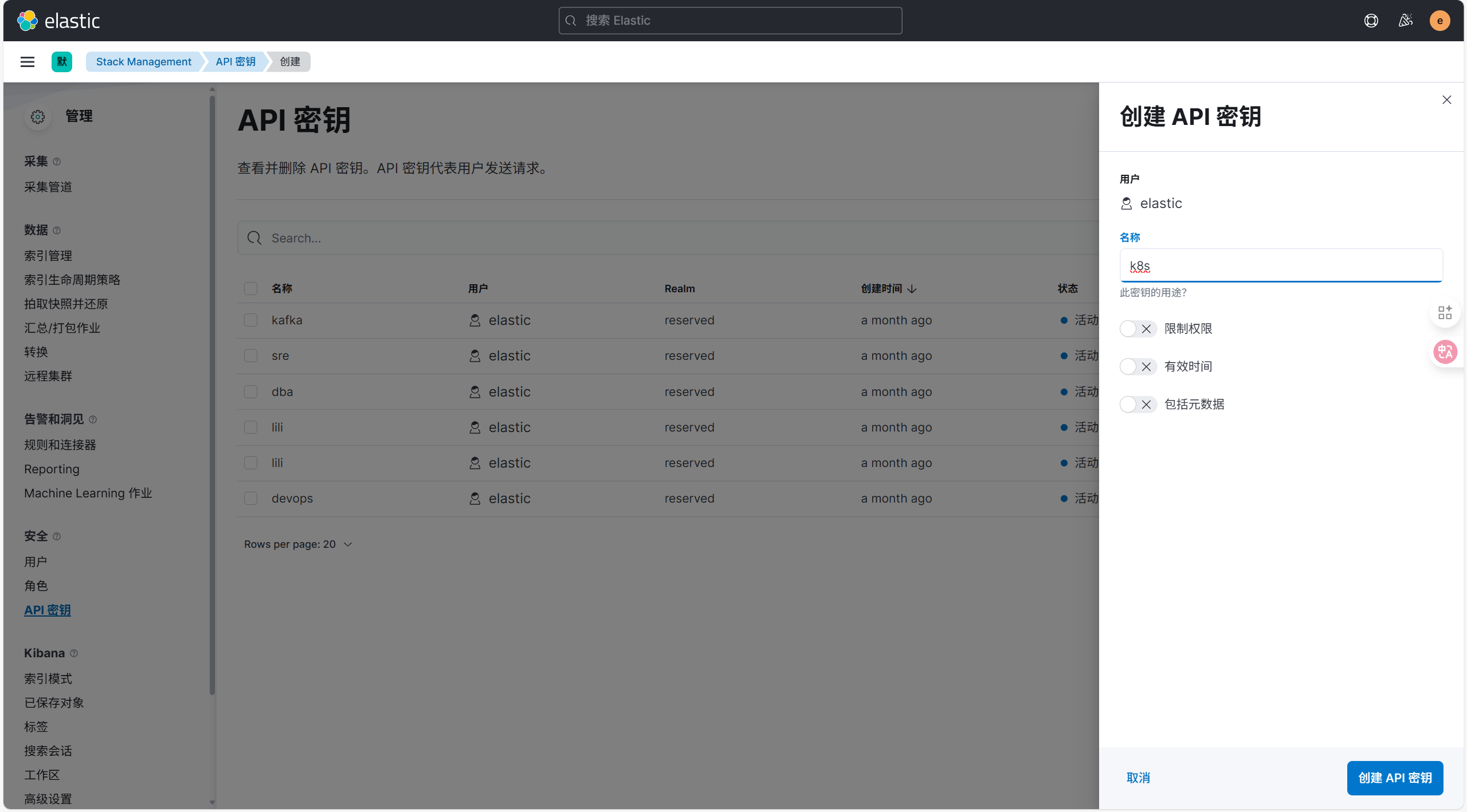

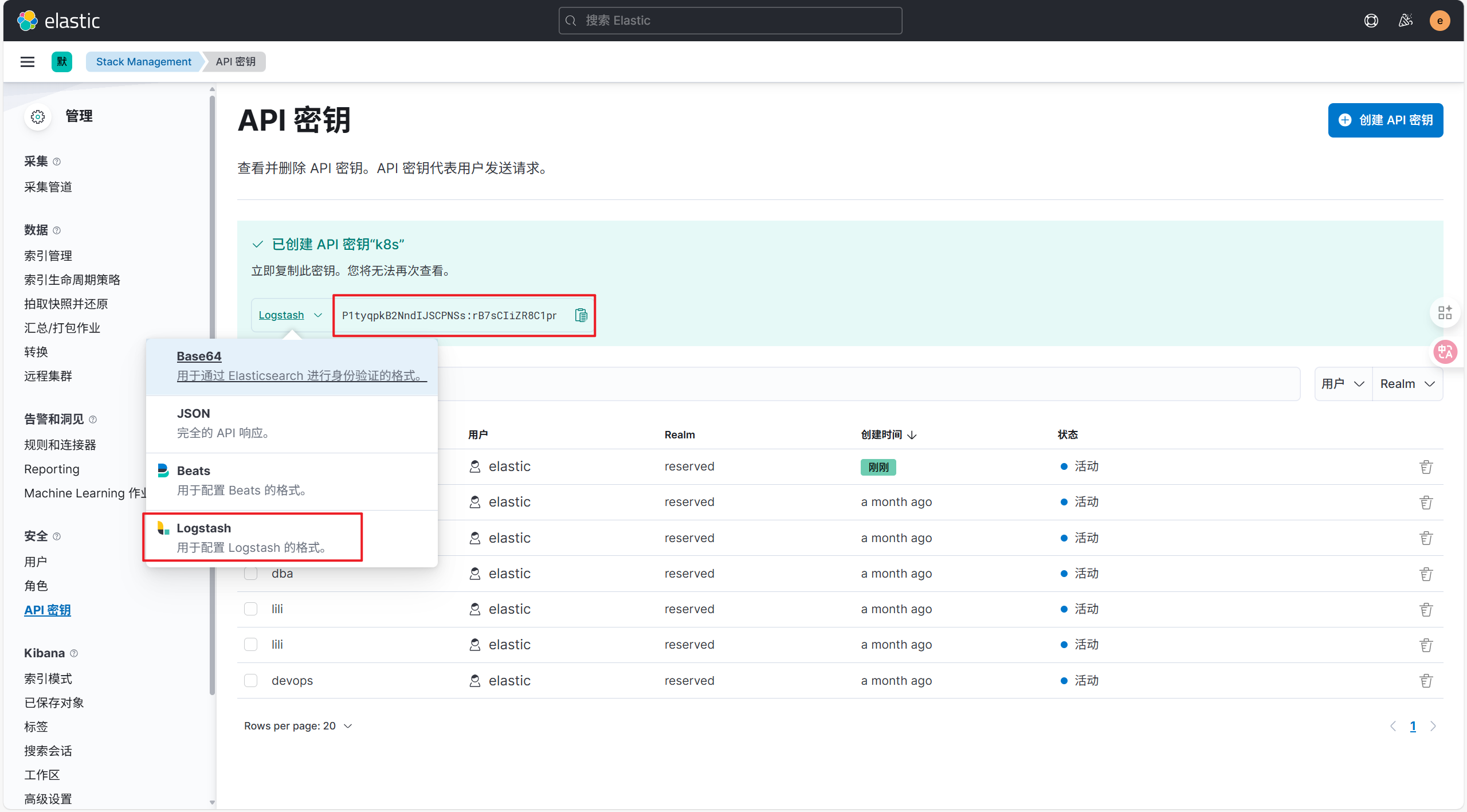

Obtain an API key (the Logstash `api_key` option expects the form `id:api_key`): P1tyqpkB2NndIJSCPNSs:rB7sCIiZR8C1pn3vi3PyFw

```
[root@ubt-elk93 ~]# cat /etc/logstash/conf.d/11-kafka_k8s-to-es.conf
```

```
input {
  kafka {
    bootstrap_servers => "10.0.0.91:9092,10.0.0.92:9092,10.0.0.93:9092"
    group_id => "k8s-006"
    topics => ["linux-k8s-external-kafka"]
    auto_offset_reset => "earliest"
  }
}

filter {
  json {
    source => "message"
  }

  mutate {
    remove_field => [ "tags","input","agent","@version","ecs" , "log", "host"]
  }

  grok {
    match => {
      "message" => "%{HTTPD_COMMONLOG}"
    }
  }

  geoip {
    source => "clientip"
    database => "/root/GeoLite2-City_20250311/GeoLite2-City.mmdb"
    default_database_type => "City"
  }

  date {
    match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
  }

  useragent {
    source => "message"
    target => "linux99-useragent"
  }
}

output {
  # stdout {
  #   codec => rubydebug
  # }

  elasticsearch {
    hosts => ["https://10.0.0.91:9200","https://10.0.0.92:9200","https://10.0.0.93:9200"]
    index => "logstash-kafka-k8s-%{+YYYY.MM.dd}"
    api_key => "P1tyqpkB2NndIJSCPNSs:rB7sCIiZR8C1pn3vi3PyFw"
    ssl => true
    ssl_certificate_verification => false
  }
}
```
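The following rough Python approximation shows what the `json`, `grok` and `date` stages do to one Kafka record. It assumes Filebeat's default JSON encoding of events into Kafka, where the raw nginx line sits in the `message` field. The hand-written regex only approximates `HTTPD_COMMONLOG` with the legacy (non-ECS) field names the config relies on (`clientip`, `timestamp`). It is not Logstash's implementation, and the sample log line in the test is made up.

```python
import json
import re
from datetime import datetime

# Approximation of grok's HTTPD_COMMONLOG with legacy field names.
COMMONLOG = re.compile(
    r'(?P<clientip>\S+) (?P<ident>\S+) (?P<auth>\S+) '
    r'\[(?P<timestamp>[^\]]+)\] '
    r'"(?:(?P<verb>\w+) (?P<request>\S+)(?: HTTP/(?P<httpversion>[\d.]+))?|(?P<rawrequest>[^"]*))" '
    r'(?:-|(?P<response>\d+)) (?:-|(?P<bytes>\d+))'
)


def process(kafka_value: str) -> dict:
    """Apply json -> grok -> date to one Kafka record value."""
    event = json.loads(kafka_value)    # json { source => "message" }
    line = event["message"]            # raw access-log line shipped by Filebeat
    m = COMMONLOG.match(line)          # like grok, trailing text (referrer, agent) is ignored
    if m is None:
        return {"tags": ["_grokparsefailure"]}
    fields = {k: v for k, v in m.groupdict().items() if v is not None}
    # date { match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ] }
    fields["@timestamp"] = datetime.strptime(fields["timestamp"], "%d/%b/%Y:%H:%M:%S %z")
    return fields
```

If the pipeline runs with ECS compatibility enabled (the default in Logstash 8), grok's `HTTPD_COMMONLOG` may emit ECS names such as `[source][address]` instead of `clientip`. It is worth checking which fields actually arrive before relying on the `geoip` source.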

Verify the data in Kibana at http://10.0.0.91:5601/.

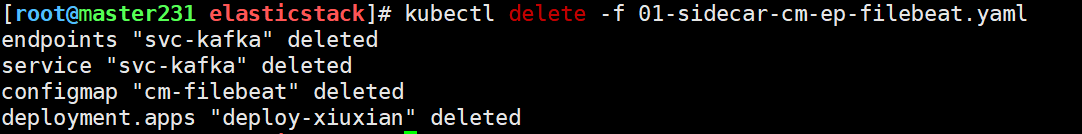

```
[root@master231 elasticstack]# kubectl delete -f 01-sidecar-cm-ep-filebeat.yaml
```

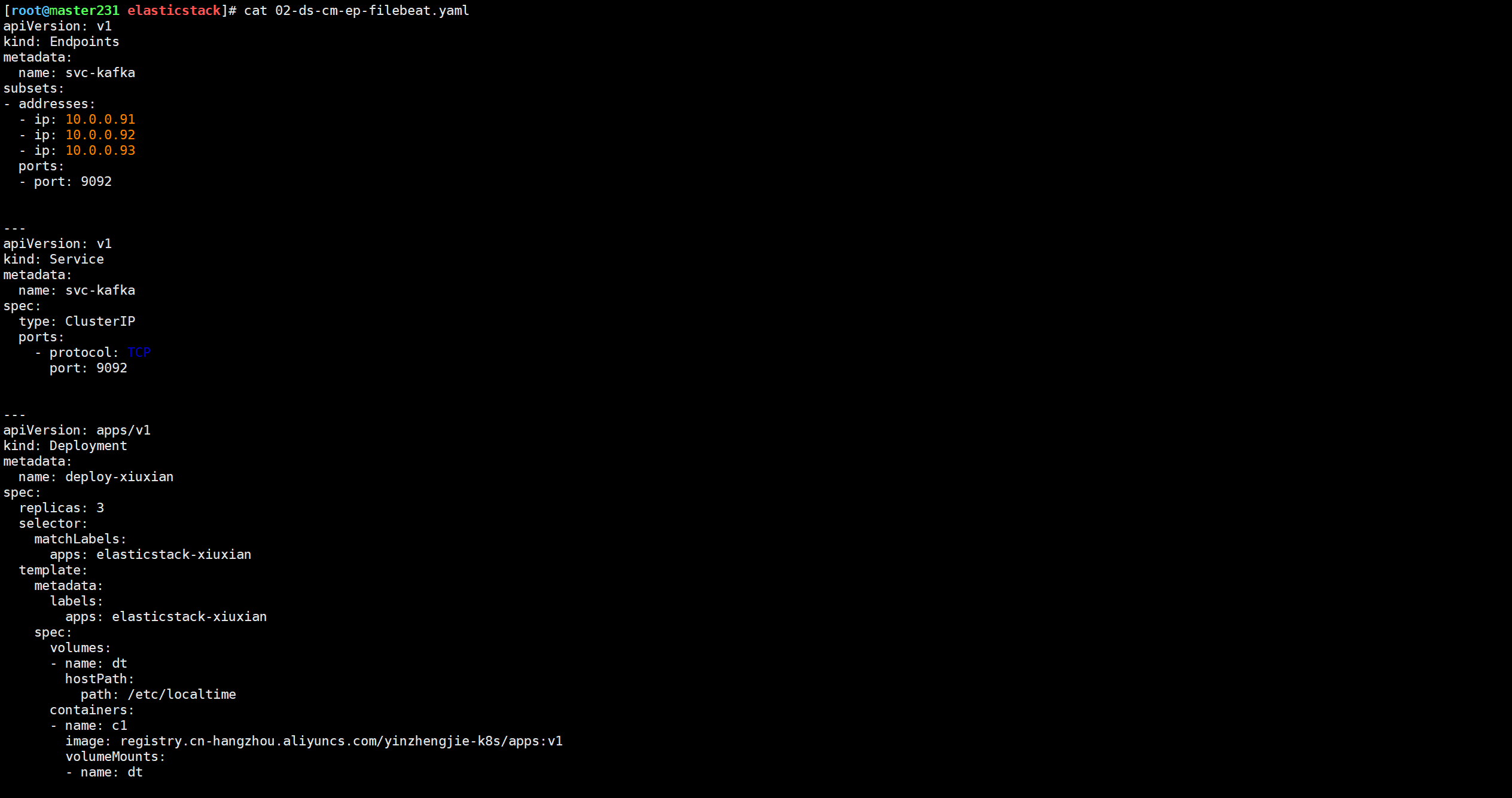

```
[root@master231 elasticstack]# cat 02-ds-cm-ep-filebeat.yaml
```

```yaml
apiVersion: v1
kind: Endpoints
metadata:
  name: svc-kafka
subsets:
- addresses:
  - ip: 10.0.0.91
  - ip: 10.0.0.92
  - ip: 10.0.0.93
  ports:
  - port: 9092
---
apiVersion: v1
kind: Service
metadata:
  name: svc-kafka
spec:
  type: ClusterIP
  ports:
  - protocol: TCP
    port: 9092
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: deploy-xiuxian
spec:
  replicas: 3
  selector:
    matchLabels:
      apps: elasticstack-xiuxian
  template:
    metadata:
      labels:
        apps: elasticstack-xiuxian
    spec:
      volumes:
      - name: dt
        hostPath:
          path: /etc/localtime
      containers:
      - name: c1
        image: registry.cn-hangzhou.aliyuncs.com/yinzhengjie-k8s/apps:v1
        volumeMounts:
        - name: dt
          mountPath: /etc/localtime
---
```

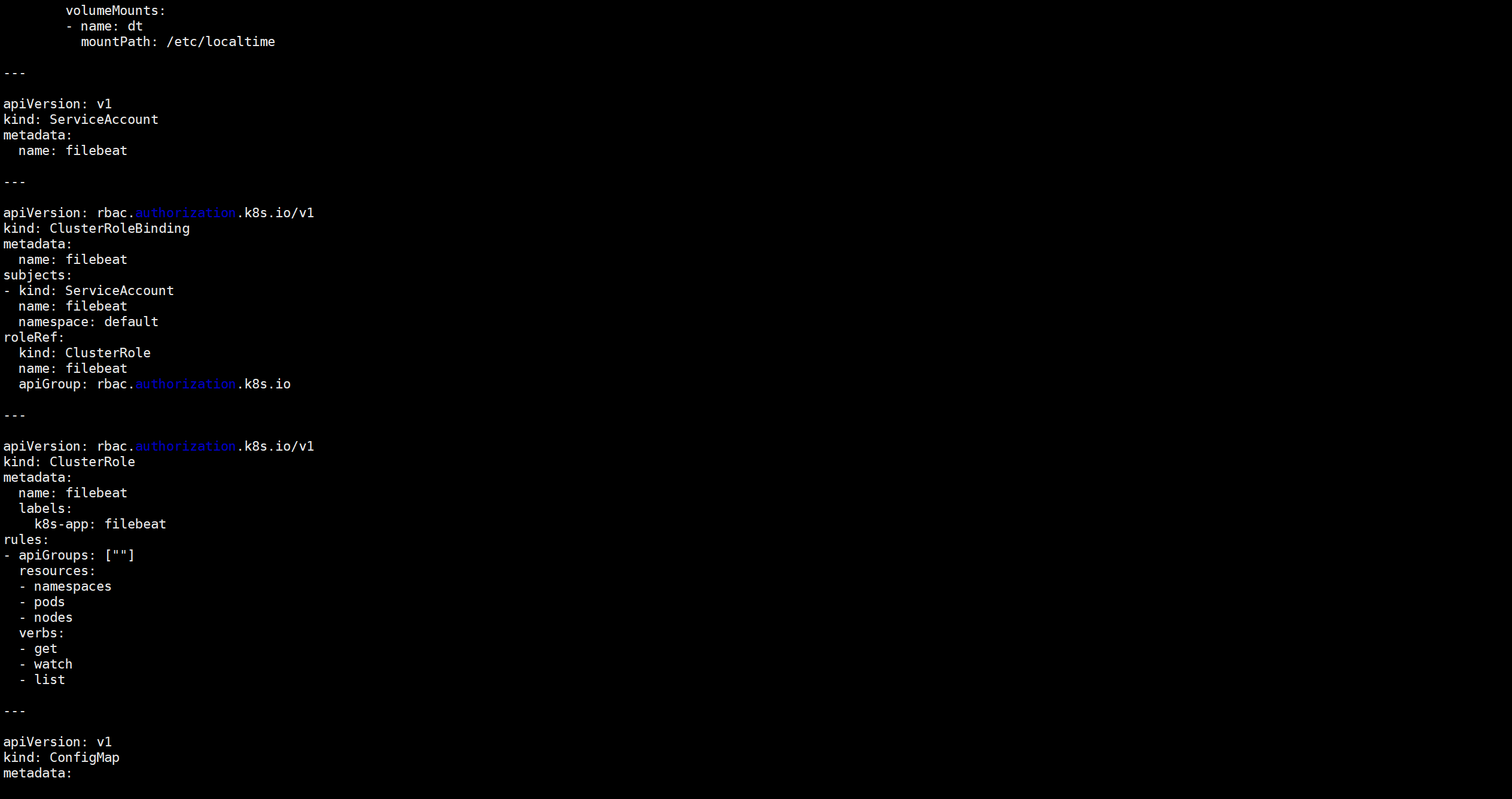

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: filebeat
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: filebeat
subjects:
- kind: ServiceAccount
  name: filebeat
  namespace: default
roleRef:
  kind: ClusterRole
  name: filebeat
  apiGroup: rbac.authorization.k8s.io
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: filebeat
  labels:
    k8s-app: filebeat
rules:
- apiGroups: [""]
  resources:
  - namespaces
  - pods
  - nodes
  verbs:
  - get
  - watch
  - list
---
```

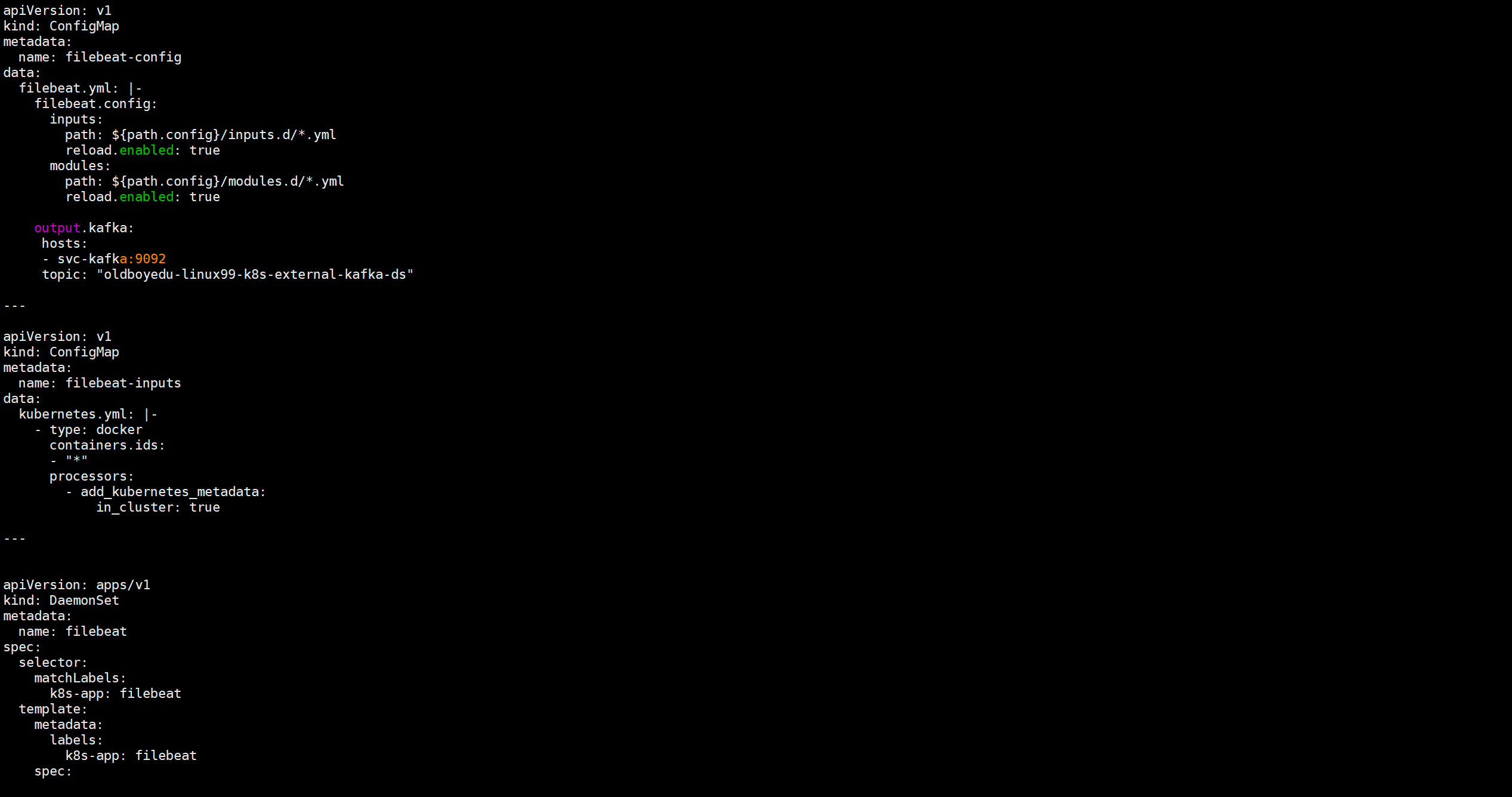

apiVersion: v1

kind: ConfigMap

metadata:

name: filebeat-config

data:

filebeat.yml: |-

filebeat.config:

inputs:

path: ${path.config}/inputs.d/*.yml

reload.enabled: true

modules:

path: ${path.config}/modules.d/*.yml

reload.enabled: true

output.kafka:

hosts:

- svc-kafka:9092

topic: "linux-k8s-external-kafka-ds"

---

apiVersion: v1

kind: ConfigMap

metadata:

name: filebeat-inputs

data:

kubernetes.yml: |-

- type: docker

containers.ids:

- "*"

processors:

- add_kubernetes_metadata:

in_cluster: true

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: filebeat

spec:

selector:

matchLabels:

k8s-app: filebeat

template:

metadata:

labels:

k8s-app: filebeat

spec:

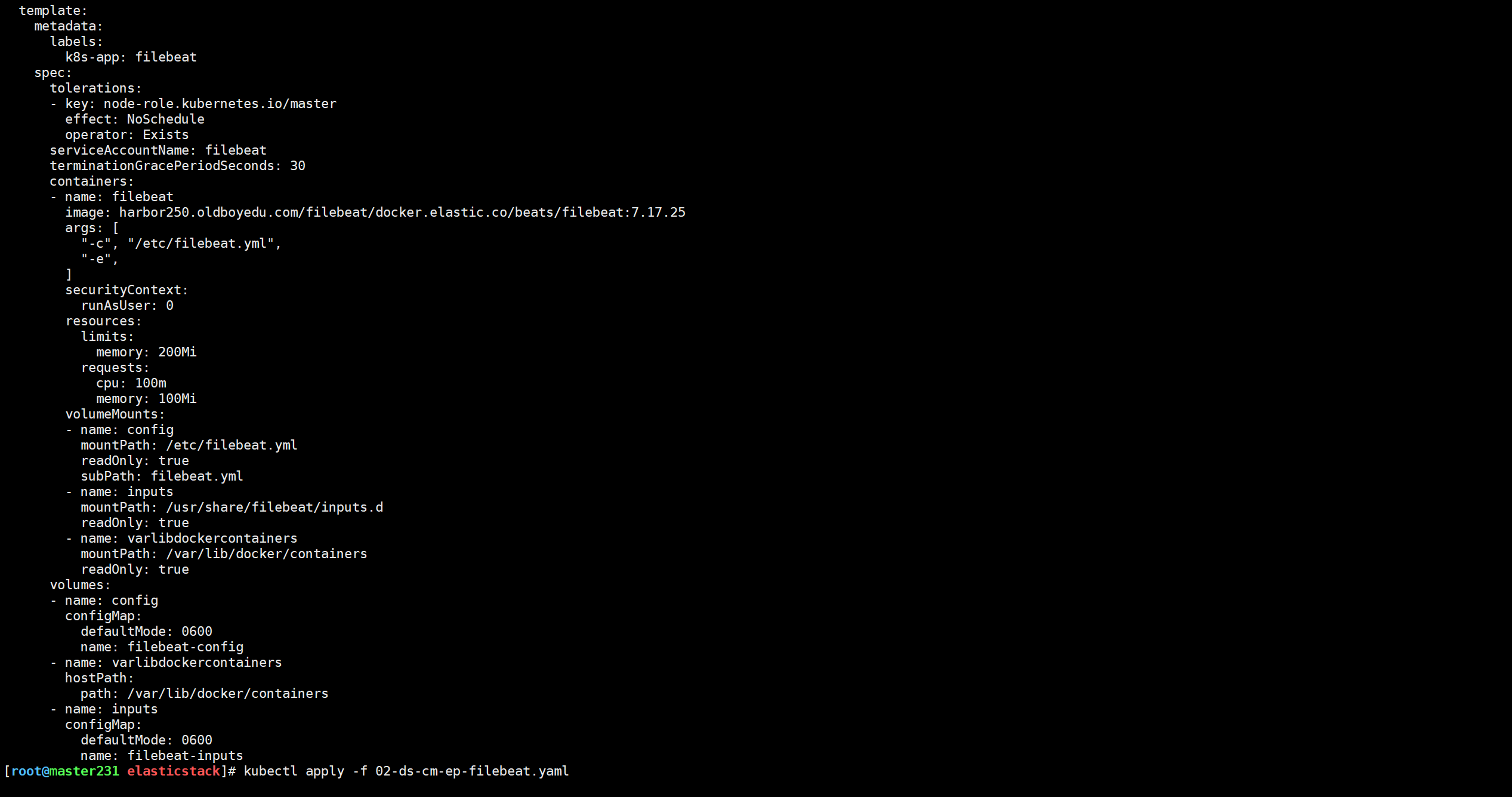

tolerations:

- key: node-role.kubernetes.io/master

effect: NoSchedule

operator: Exists

serviceAccountName: filebeat

terminationGracePeriodSeconds: 30

containers:

- name: filebeat

image: harbor250.liliyy.top/filebeat/docker.elastic.co/beats/filebeat:7.17.25

args: [

"-c", "/etc/filebeat.yml",

"-e",

]

securityContext:

runAsUser: 0

resources:

limits:

memory: 200Mi

requests:

cpu: 100m

memory: 100Mi

volumeMounts:

- name: config

mountPath: /etc/filebeat.yml

readOnly: true

subPath: filebeat.yml

- name: inputs

mountPath: /usr/share/filebeat/inputs.d

readOnly: true

- name: varlibdockercontainers

mountPath: /var/lib/docker/containers

readOnly: true

volumes:

- name: config

configMap:

defaultMode: 0600

name: filebeat-config

- name: varlibdockercontainers

hostPath:

path: /var/lib/docker/containers

- name: inputs

configMap:

defaultMode: 0600

name: filebeat-inputs

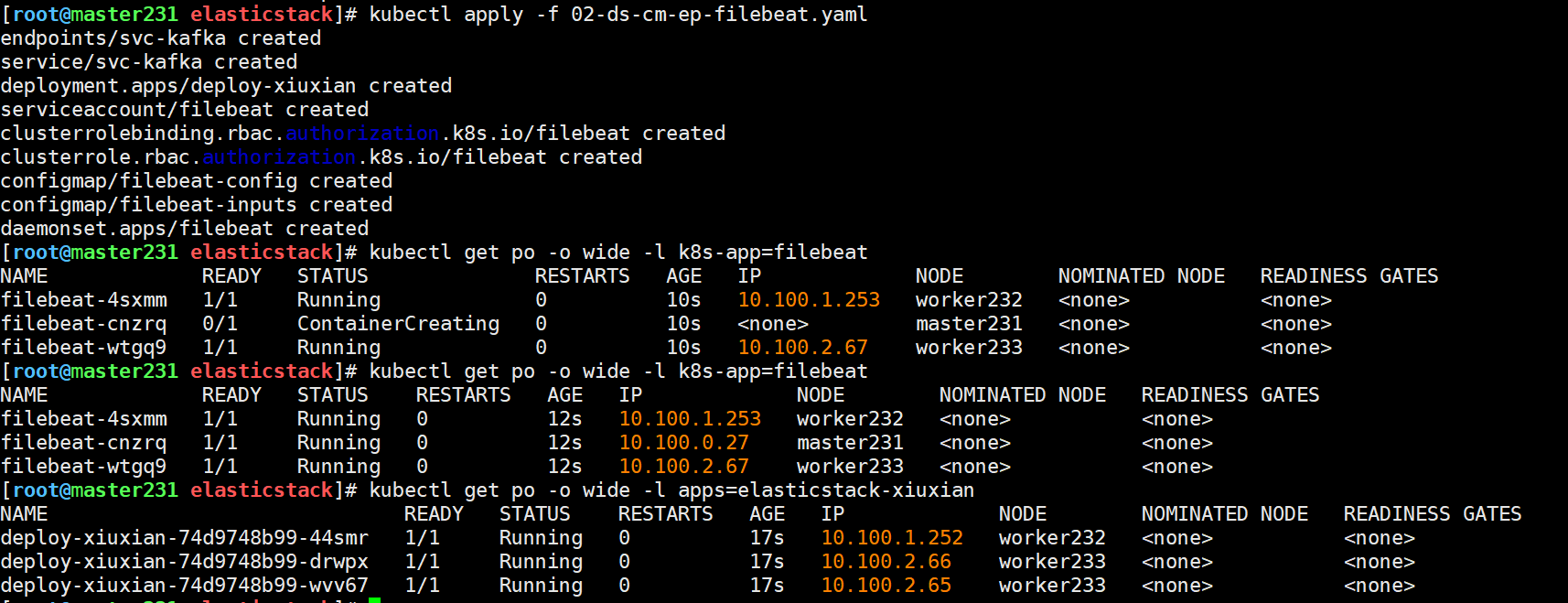
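With `type: docker`, Filebeat reads the files that Docker's `json-file` logging driver writes under `/var/lib/docker/containers/<container-id>/`. Each file holds one JSON object per line, with `log`, `stream` and `time` keys. `add_kubernetes_metadata` then attaches pod metadata to each container's events, using the pod information it watches through the API server. This is why the ClusterRole grants `get`/`watch`/`list`.

This setup assumes the nodes run Docker with the json-file driver. Nodes that run containerd keep container logs under `/var/log/pods` in a different format. Below is a Python sketch of the parsing step. `container_id_from_path` and `parse_docker_json_line` are hypothetical helpers.

```python
import json
from pathlib import PurePosixPath

DOCKER_ROOT = PurePosixPath("/var/lib/docker/containers")


def container_id_from_path(log_path: str) -> str:
    """/var/lib/docker/containers/<id>/<id>-json.log -> <id>"""
    rel = PurePosixPath(log_path).relative_to(DOCKER_ROOT)  # ValueError if outside the root
    return rel.parts[0]


def parse_docker_json_line(raw: str) -> dict:
    """Parse one line written by Docker's json-file logging driver."""
    rec = json.loads(raw)
    return {
        "message": rec["log"].rstrip("\n"),  # the application's own output line
        "stream": rec["stream"],             # "stdout" or "stderr"
        "time": rec["time"],                 # RFC 3339 timestamp, left as a string here
    }
```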

```
[root@master231 elasticstack]# kubectl apply -f 02-ds-cm-ep-filebeat.yaml
[root@master231 elasticstack]# kubectl get po -o wide -l k8s-app=filebeat
[root@master231 elasticstack]# kubectl get po -o wide -l apps=elasticstack-xiuxian
```

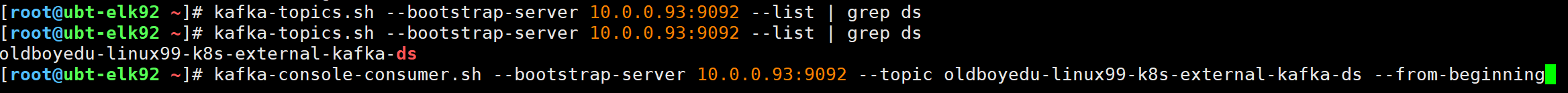

```
[root@elk92 ~]# kafka-topics.sh --bootstrap-server 10.0.0.93:9092 --list | grep ds
linux-k8s-external-kafka-ds
[root@elk92 ~]# kafka-console-consumer.sh --bootstrap-server 10.0.0.93:9092 --topic linux-k8s-external-kafka-ds --from-beginning
```

Obtain an API key (the Logstash `api_key` option expects the form `id:api_key`): P1tyqpkB2NndIJSCPNSs:rB7sCIiZR8C1pn3vi3PyFw

```
[root@ubt-elk93 ~]# vim /etc/logstash/conf.d/12-kafka_k8s_ds-to-es.conf
```

```
input {
  kafka {
    bootstrap_servers => "10.0.0.91:9092,10.0.0.92:9092,10.0.0.93:9092"
    group_id => "k8s-001"
    topics => ["linux-k8s-external-kafka-ds"]
    auto_offset_reset => "earliest"
  }
}

filter {
  json {
    source => "message"
  }

  mutate {
    remove_field => [ "tags","input","agent","@version","ecs" , "log", "host"]
  }

  grok {
    match => {
      "message" => "%{HTTPD_COMMONLOG}"
    }
  }

  geoip {
    source => "clientip"
    database => "/root/GeoLite2-City_20250311/GeoLite2-City.mmdb"
    default_database_type => "City"
  }

  date {
    match => [ "timestamp", "dd/MMM/yyyy:HH:mm:ss Z" ]
  }

  useragent {
    source => "message"
    target => "linux-useragent"
  }
}

output {
  # stdout {
  #   codec => rubydebug
  # }

  elasticsearch {
    hosts => ["https://10.0.0.91:9200","https://10.0.0.92:9200","https://10.0.0.93:9200"]
    index => "logstash-kafka-k8s-ds-%{+YYYY.MM.dd}"
    api_key => "P1tyqpkB2NndIJSCPNSs:rB7sCIiZR8C1pn3vi3PyFw"
    ssl => true
    ssl_certificate_verification => false
  }
}
```
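The `%{+YYYY.MM.dd}` reference in `index` formats the event's `@timestamp`, which Logstash stores in UTC. The `date` filter above sets `@timestamp` from the nginx log time. Assume, for this example only, that the hosts run in a UTC+8 zone. Requests logged before 08:00 local time would then go into the previous day's index. Here is a sketch of the naming rule. `daily_index` is a hypothetical helper.

```python
from datetime import datetime, timedelta, timezone


def daily_index(prefix: str, ts: datetime) -> str:
    """Mimic Logstash's %{+YYYY.MM.dd}: date of @timestamp rendered in UTC."""
    if ts.tzinfo is None:
        raise ValueError("timestamp must be timezone-aware")
    return prefix + ts.astimezone(timezone.utc).strftime("%Y.%m.%d")


cst = timezone(timedelta(hours=8))  # assumed UTC+8 local time zone
print(daily_index("logstash-kafka-k8s-ds-", datetime(2025, 3, 12, 7, 30, tzinfo=cst)))
# logstash-kafka-k8s-ds-2025.03.11
```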

```
[root@ubt-elk93 ~]# logstash -rf /etc/logstash/conf.d/12-kafka_k8s_ds-to-es.conf
```

```
[root@ubt-elk92 ~]# kafka-topics.sh --bootstrap-server 10.0.0.93:9092 --list | grep ds
linux-k8s-external-kafka-ds
[root@ubt-elk92 ~]# kafka-console-consumer.sh --bootstrap-server 10.0.0.93:9092 --topic linux-k8s-external-kafka-ds --from-beginning
```

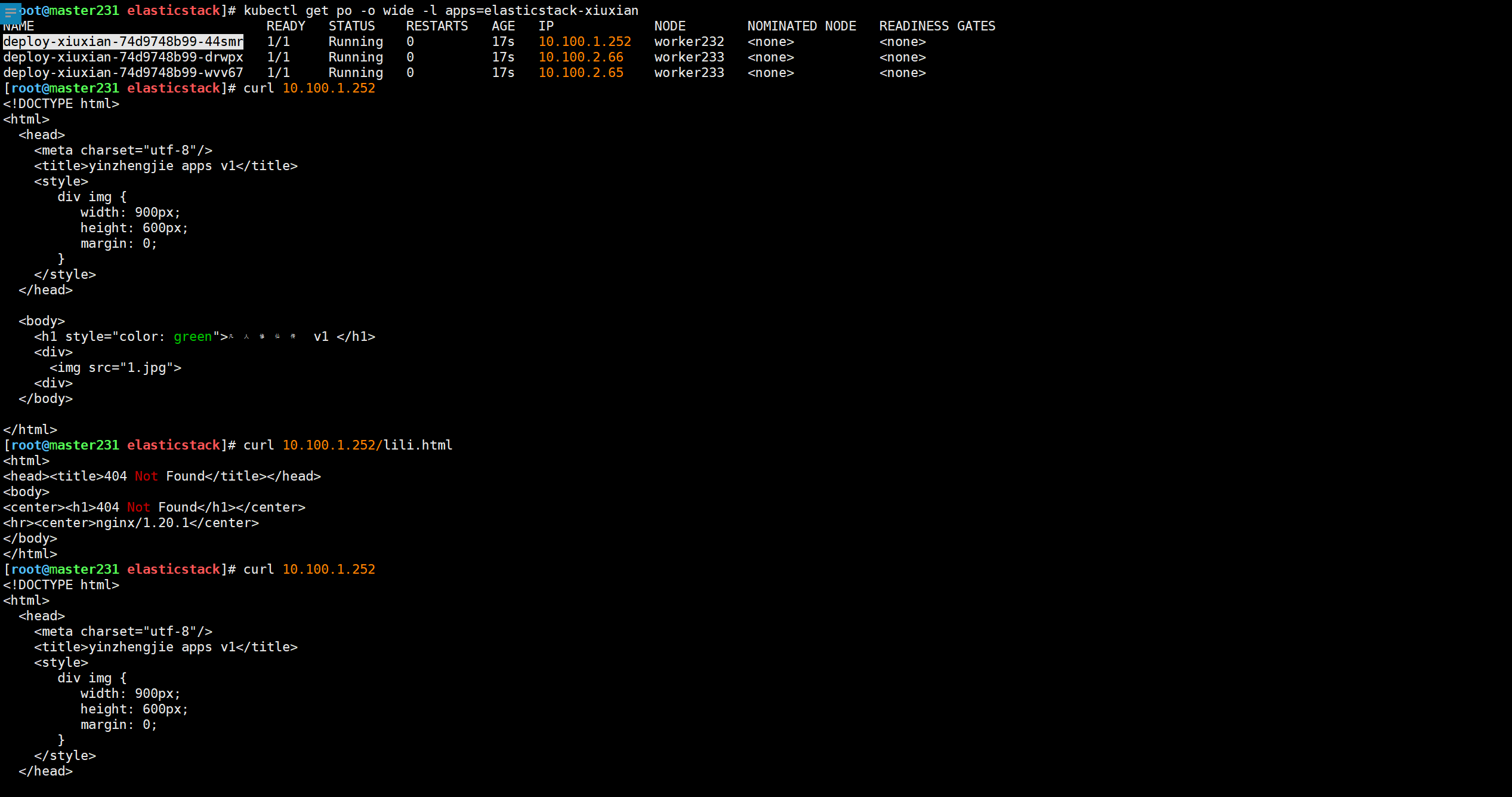

```
[root@master231 elasticstack]# curl 10.100.1.252
[root@master231 elasticstack]# curl 10.100.1.252/lili.html
```

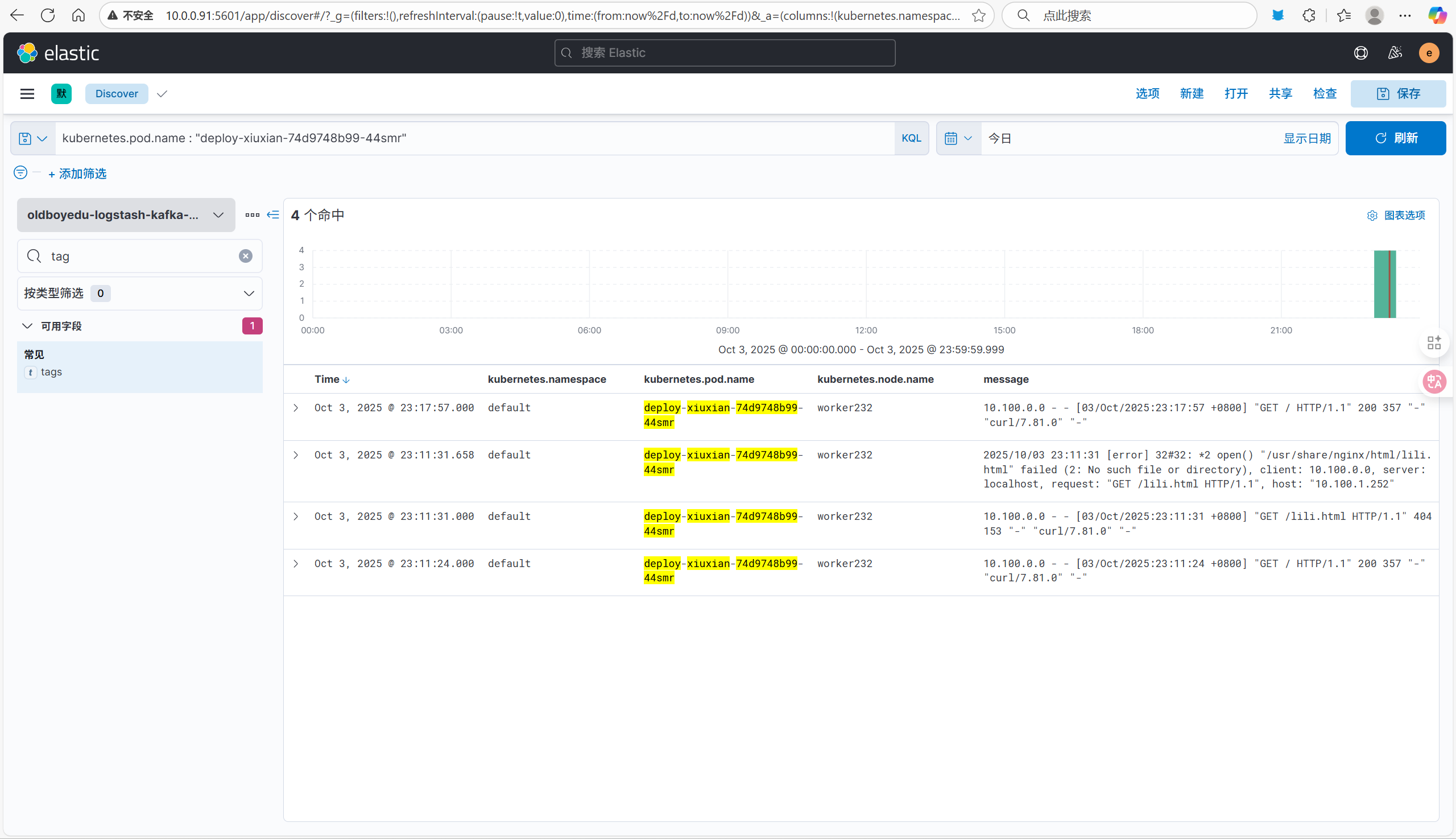

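The indexed events now carry Kubernetes metadata added by the `add_kubernetes_metadata` processor, for example `kubernetes.pod.name : "deploy-xiuxian-74d9748b99-44smr"`.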
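Besides `kubernetes.pod.name`, `add_kubernetes_metadata` also attaches fields such as `kubernetes.namespace` and the pod labels (for example `kubernetes.labels.apps`). Filtering on these is usually the more robust way to select a workload. If only the pod name is available, note that a Deployment's pods follow the pattern `<deployment>-<pod-template-hash>-<random suffix>`, so the Deployment name can be recovered heuristically. The sketch below does this. `deployment_from_pod` is a hypothetical helper, and the regex is an approximation that can misfire on names that merely look similar.

```python
import re
from typing import Optional

# <deployment>-<pod-template-hash (up to 10 chars)>-<5-char random suffix>
POD_NAME = re.compile(r"^(?P<deployment>.+)-(?P<hash>[a-z0-9]{1,10})-(?P<suffix>[a-z0-9]{5})$")


def deployment_from_pod(pod_name: str) -> Optional[str]:
    """Best-effort guess of the owning Deployment; None if the name doesn't fit the pattern."""
    m = POD_NAME.match(pod_name)
    return m.group("deployment") if m else None


print(deployment_from_pod("deploy-xiuxian-74d9748b99-44smr"))  # deploy-xiuxian
```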